eMagiz Event Streaming

This microlearning gives a conceptual overview of event streaming. It covers the publish-subscribe pattern, topics, retention (retention hours and retention bytes), replication, reading data from a topic, consumer groups, and the event streaming broker. Because the technical details are explained from a conceptual perspective, it is useful for both beginners and experienced professionals.

Should you have any questions, please get in touch with academy@emagiz.com.

1. Prerequisites

- Some context on Event Streaming will be helpful.

2. Key concepts

All concepts are discussed in the section below.

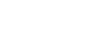

3. Introducing Event Streaming

Event streaming is the concept whereby systems can produce and consume data from a single location. In the integration world, this pattern is called Publish-Subscribe (or PubSub). The single location is called a topic. The Publisher is often called a Producer, and the Subscriber a Consumer. The key is that a topic may have multiple Publishers of the same data and multiple Subscribers of the same data. Consumers are subscribed to a topic and will receive a notification that triggers a read action. The core focus of Event Streaming is high volume and high speed.

3.1 Topics

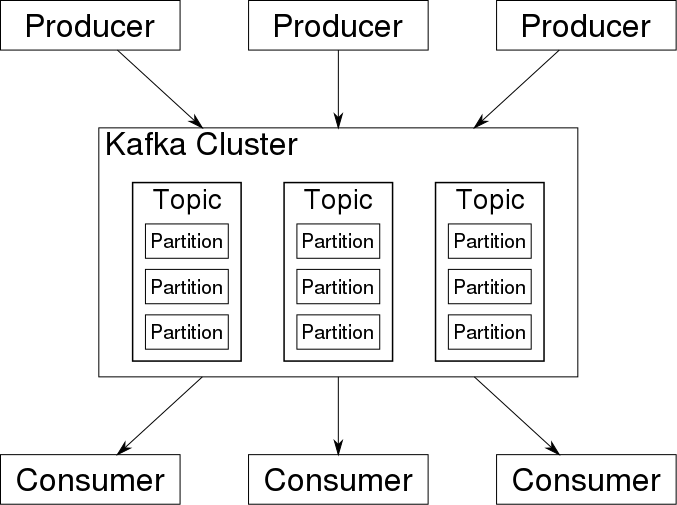

A topic is where messages (data packets) are stored. It is not a conventional database; it uses a log-based approach to store data. Each new data packet is appended to the end of the log and is assigned an offset, which lets consumers keep track of which messages they have and have not read. The data on a topic can be spread across multiple partitions. A partition is the smallest technical unit into which a data packet is written. Having multiple partitions allows Producers and Consumers to write and read data in parallel at very high speed.

Topics are intended for small events, no bigger than 1 MB per data packet/message.
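The log-based storage described above can be sketched in a few lines of Python. This is a minimal in-memory illustration, not how eMagiz or Kafka implement topics: the `Topic` and `Partition` classes, the key-based partitioner, and the `MAX_MESSAGE_BYTES` constant are all assumptions made for the example.

```python
from dataclasses import dataclass, field

# Assumed limit for the sketch, mirroring the ~1 MB guideline in the text.
MAX_MESSAGE_BYTES = 1_000_000


@dataclass
class Partition:
    """An append-only log; a record's offset is its position in the log."""
    records: list = field(default_factory=list)

    def append(self, payload: bytes) -> int:
        if len(payload) > MAX_MESSAGE_BYTES:
            raise ValueError("payload exceeds the assumed 1 MB limit")
        self.records.append(payload)
        return len(self.records) - 1  # offset of the record just written


@dataclass
class Topic:
    name: str
    partitions: list

    @classmethod
    def create(cls, name: str, partition_count: int) -> "Topic":
        return cls(name, [Partition() for _ in range(partition_count)])

    def publish(self, key: str, payload: bytes) -> tuple:
        # Hypothetical partitioner: the same key always maps to the same partition.
        index = sum(key.encode()) % len(self.partitions)
        return index, self.partitions[index].append(payload)


topic = Topic.create("orders", partition_count=3)
first = topic.publish("customer-1", b"order created")
second = topic.publish("customer-1", b"order paid")
```

Both calls return a `(partition, offset)` pair. Because the key is identical, the two records land in the same partition with consecutive offsets, which preserves their order for any consumer of that partition.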

3.2 Retention

Retention is an essential concept that allows for the deletion of messages from the topic in an organized manner. The key is that data should be kept on the topic for a limited time or size.

3.2.1 Retention Hours

Retention Hours is the number of hours data can reside on the topic before it is removed on a FIFO (first in, first out) basis, oldest entry first. Once data has been on the topic longer than this threshold, the topic automatically starts deleting it. The default setting eMagiz provides is 48 hours (2 days).
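As a rough illustration of time-based retention, the sketch below removes the oldest entries from a log once they exceed the retention window. The `apply_retention` function and the representation of the log as `(written_at_hours, payload)` tuples are assumptions for the example; the broker performs this cleanup internally.

```python
from collections import deque

RETENTION_HOURS = 48  # the eMagiz default mentioned in the text


def apply_retention(log: deque, now_hours: float,
                    retention_hours: float = RETENTION_HOURS) -> int:
    """Remove entries older than the retention window, oldest first (FIFO).

    `log` holds (written_at_hours, payload) tuples in write order.
    Returns the number of entries removed.
    """
    removed = 0
    while log and now_hours - log[0][0] > retention_hours:
        log.popleft()
        removed += 1
    return removed


log = deque([(0, "a"), (10, "b"), (40, "c")])
removed = apply_retention(log, now_hours=60)
```

At hour 60, the entries written at hours 0 and 10 are older than 48 hours and are removed; the entry written at hour 40 stays on the log.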

3.3 Replication

By default, data is replicated across three different nodes, which protects against data loss and supports high availability and high performance. Event Streaming distributes the partitions of a particular topic across multiple brokers. This brings the following benefits:

- If we put all partitions of a topic in a single broker, the broker's IO throughput will constrain its scalability. A topic will never get bigger than the biggest machine in the cluster. By spreading partitions across multiple brokers, a single topic can be scaled horizontally to provide performance far beyond a single broker's ability.

- Multiple consumers can consume a single topic in parallel. Serving all partitions from a single broker limits the number of consumers it can support, while partitions on numerous brokers enable more consumers.

- Multiple instances of the same consumer can connect to partitions on different brokers, allowing very high message processing throughput. Each partition is served to exactly one consumer instance, ensuring each record has a clear processing owner.
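To make the idea of spreading partitions and their replicas across brokers concrete, here is a simplified round-robin placement sketch. It is an assumption-based illustration only: the `assign_replicas` function and the broker names are hypothetical, and the actual placement logic used by the broker is more involved.

```python
def assign_replicas(partition_count: int, brokers: list,
                    replication_factor: int = 3) -> dict:
    """Place each partition's replicas on distinct brokers, round-robin."""
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    placement = {}
    for partition in range(partition_count):
        # Start at a different broker per partition so load is spread evenly.
        placement[partition] = [
            brokers[(partition + i) % len(brokers)]
            for i in range(replication_factor)
        ]
    return placement


placement = assign_replicas(4, ["broker-a", "broker-b", "broker-c"])
```

With three brokers and a replication factor of three, every partition has a copy on every broker, while the first replica of each partition rotates across the brokers.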

3.4 Reading data from a topic

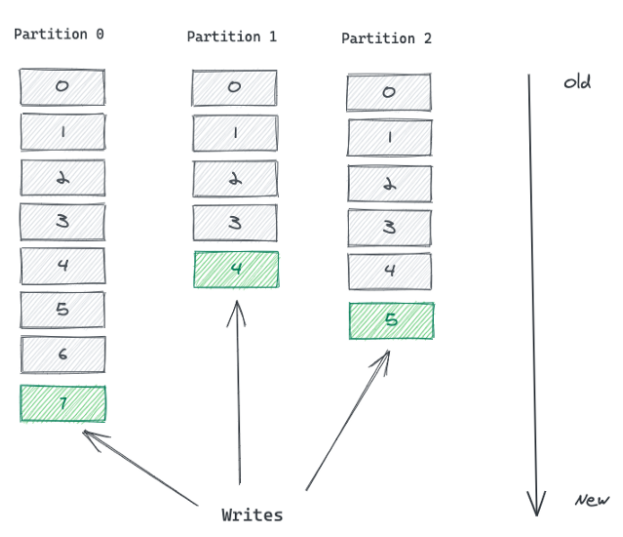

Consumers can read data from a topic at their own pace and availability. Consumers need to come and get the data. The offset of a message works as a consumer-side cursor at this point. The consumer keeps track of which messages it has already consumed by keeping track of the offset of messages. After reading a message, the consumer advances its cursor to the next offset in the partition and continues. Advancing and remembering the last read offset within a partition is the consumer's responsibility. The broker has nothing to do with it.
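The cursor behaviour can be sketched as follows. The `Consumer` class and its `poll` method are hypothetical names for this illustration, and the partition is modelled as a plain Python list.

```python
class Consumer:
    """Reads a partition at its own pace and remembers its own offset."""

    def __init__(self, partition_log: list):
        self.log = partition_log
        self.offset = 0  # offset of the next record to read

    def poll(self, max_records: int = 10) -> list:
        batch = self.log[self.offset:self.offset + max_records]
        self.offset += len(batch)  # advance the cursor past what was read
        return batch


log = ["e0", "e1", "e2"]
fast = Consumer(log)
slow = Consumer(log)

fast_batch = fast.poll()               # reads everything available
slow_batch = slow.poll(max_records=1)  # reads only one record

log.append("e3")
fast_next = fast.poll()                # continues where it left off
```

Each consumer holds its own offset, so a slow consumer does not hold back a fast one, and reading a record does not remove it from the log.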

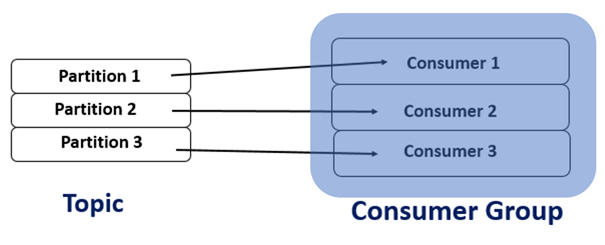

3.4.1 Consumer Groups

Technically speaking, the Event Streaming protocol works with Consumer Groups of one or more consumers. When a consumer group has multiple consumers, each consumer within the group can be assigned to a specific partition of the topic. This ensures the efficiency and throughput of messages on the topic.

The following points are of particular interest when talking about consumer groups.

- All the Consumers in a group have the *same* group.id.

- Within a group, each partition in the topic is read by only one consumer.

- The maximum number of active Consumers in a group equals the number of partitions in the topic.

- If there are more consumers than partitions, then some of the consumers will remain idle.

- A Consumer can read from more than one partition.
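The rules in the list above can be illustrated with a simple round-robin assignment. This is a sketch under assumptions: the `assign_partitions` function and the consumer names are hypothetical, and a real broker uses its own assignment strategies.

```python
def assign_partitions(partitions: list, consumers: list) -> dict:
    """Give each partition to exactly one consumer in the group, round-robin."""
    if not consumers:
        raise ValueError("a consumer group needs at least one consumer")
    assignment = {consumer: [] for consumer in consumers}
    for i, partition in enumerate(partitions):
        assignment[consumers[i % len(consumers)]].append(partition)
    return assignment


# Fewer consumers than partitions: one consumer reads two partitions.
two_consumers = assign_partitions([0, 1, 2], ["c1", "c2"])

# More consumers than partitions: the extra consumer stays idle.
four_consumers = assign_partitions([0, 1, 2], ["c1", "c2", "c3", "c4"])
```

In the first case `c1` is assigned partitions 0 and 2 and `c2` is assigned partition 1. In the second case `c4` receives an empty list, which corresponds to an idle consumer.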

3.4 The Event Streaming Broker

The Event Streaming Broker holds the technical infrastructure to manage all these topics, the Producers and Consumers associated with them, and more. eMagiz Event Streaming uses the Kafka framework to provide this technology. The entire Event Streaming Broker is part of the eMagiz platform and is fully managed from the platform.

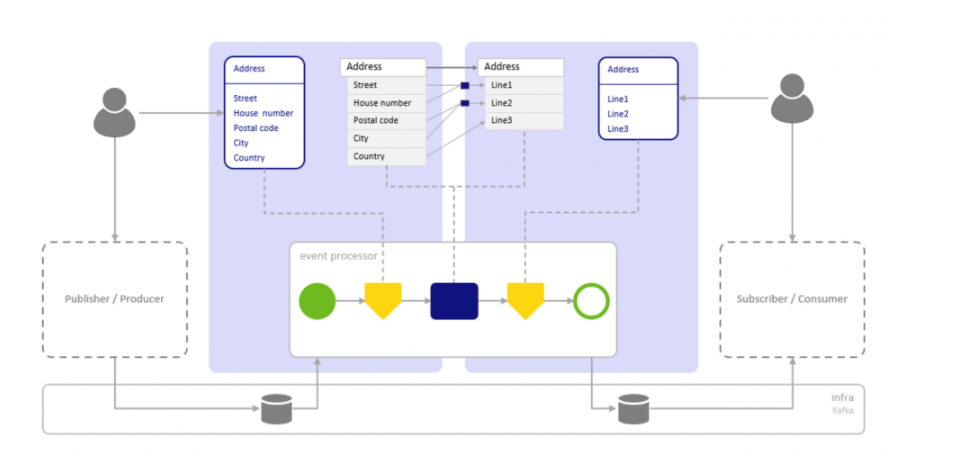

3.5 Event Processing

eMagiz reuses its transformation capability to allow message transformation. In this pattern, messages are transformed in special-type flows called Event Processors. An Event Processor has a topic in and a topic out, which means that data is transported from one topic to another. On each topic, you can define an event data model.
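Conceptually, an Event Processor reads from one topic, transforms each message, and writes the result to another topic. The sketch below models both topics as Python lists. The `process_events` and `to_output_model` functions and the field names are hypothetical; in eMagiz the transformation is configured in the platform rather than written by hand.

```python
import json


def process_events(topic_in: list, topic_out: list, transform,
                   start_offset: int = 0) -> int:
    """Transform every unread record on topic_in and append it to topic_out.

    Returns the next offset to read, so a later call can resume from there.
    """
    offset = start_offset
    while offset < len(topic_in):
        topic_out.append(transform(topic_in[offset]))
        offset += 1
    return offset


def to_output_model(raw: str) -> str:
    # Hypothetical mapping from the input data model to the output data model.
    event = json.loads(raw)
    return json.dumps({"orderId": event["id"], "status": event["state"].upper()})


orders_in = ['{"id": 1, "state": "new"}', '{"id": 2, "state": "paid"}']
orders_out = []
next_offset = process_events(orders_in, orders_out, to_output_model)
```

The input topic is left unchanged, so other consumers of that topic can still read the original events.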

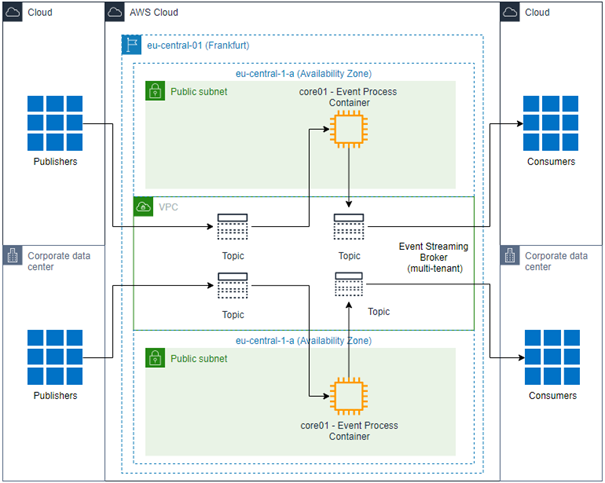

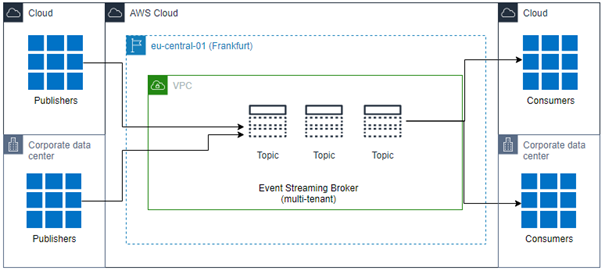

3.6 Architectural components of Event Streaming in eMagiz

The simplified pictures below illustrate the overall architecture of Event Streaming in eMagiz. The first picture shows the situation where there is a multi-tenant Event Broker and both clients use Event Processing. Note that your solution can have producers running in the cloud and consumers on-premise, or vice versa. The lines drawn in the picture are meant as a reference to illustrate how the event processing part works on an architectural level.

The picture below shows clients using the Event Broker as a passthrough (i.e., there is no event processing), so there is no need for an Event Streaming container and JMS.

3.6.1 Event Broker

eMagiz hosts an Event Broker inside the eMagiz Cloud, accessible only via the eMagiz platform. All traffic is routed via the eMagiz platform and is protected by 2-way SSL. The broker holds the specific Kafka-based technology for managing topics, Access Control Lists (ACL), users, retention, and more, that is, all components described in the introduction section of this Fundamental. The broker will respond to requests made from the eMagiz platform, such as putting a message on a topic.

3.6.2 JMS and Event Streaming container

When specific flows are deployed for event processing, the regular JMS server will control and manage the traffic. The Event Streaming container will hold the required flows for Event Processors. As usual for the eMagiz core machine, the core machine is not accessible from outside the VPC.

3.6.3 Users

Users will have access to produce and consume messages. Users are managed in the User Management section of the eMagiz Portal. Once configured, the credentials are accessible via the Event Catalog. Users can access the topics via SSL and certificates. The relevant certificates are available in the Event Catalog, along with all the required details of the Event Broker to allow access. Other access methods, such as Basic Authentication or SASL-like options, are not supported for accessing the Event Broker.

4. Key takeaways

- Event Streaming provides a means for maximum decoupling of producing and consuming systems

- Event Streaming is an asynchronous pattern by default

- Event Streaming can be used for high-volume events but can also play a role in hybrid integration scenarios