Using eMagiz as a consumer

In this microlearning, we will explore how to effectively use eMagiz as a consumer in the context of Event Streaming. We will guide you through the process of setting up eMagiz to consume and process data from specific topics within our DSH Kafka cluster. Whether you're integrating systems or setting up event processors, this guide will walk you through the essential steps and components needed to get started.

Should you have any questions, please contact academy@emagiz.com.

1. Prerequisites

- Basic knowledge of the eMagiz platform

- A DSH Kafka cluster that you can test against

- Event streaming license activated

2. Key concepts

This microlearning centers around using eMagiz as a consumer.

- By using, in this context, we mean being able to consume data from topics that are managed within an external Kafka cluster, such as the eMagiz Kafka cluster running on DSH.

- By knowing how you can easily use eMagiz as a consumer in these situations, you can create event processors or create hybrid configurations (e.g., messaging to event streaming combination).

In this microlearning, we will focus on the components in eMagiz that you need to consume data from a specific topic.

3. Using eMagiz as a consumer

When integrating several systems, it can quickly happen that a subset of those systems can only be reached via more traditional patterns. In contrast, others can write and/or read data directly on a topic. In this microlearning, we will focus on the scenario where an external party writes data to a topic. eMagiz will, in turn, consume data from the topic and distribute it to several legacy systems using specific components.

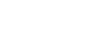

To make this work, you need to identify the producing system, the consuming system (eMagiz), and create a messaging onramp that ensures the messaging pattern is followed towards the legacy systems. In the picture shown below, we have illustrated this in the Capture phase of eMagiz.

To complete the picture, we need to ensure that the data is sent to a legacy system. Something along these lines:

In the Design phase, you can configure your integration as explained in various of our previous microlearnings. Therefore, we will assume that you designed your integration as you had intended.

3.1 View solution in Create

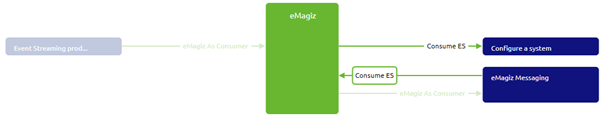

The first step after adding the integrations to Create is to see how eMagiz represents this depiction of reality in the Create phase. See below for the result of the Event Streaming part and the Messaging part:

As learned in previous microlearnings, the Event Streaming part, in this case, the creation of the topic, is done automatically by eMagiz. We will zoom in on how you can set up your messaging entry flow to consume data from the topic that eMagiz automatically creates.

3.2 Consume data

When you open your entry flow, you will see that eMagiz has autogenerated some components for you.

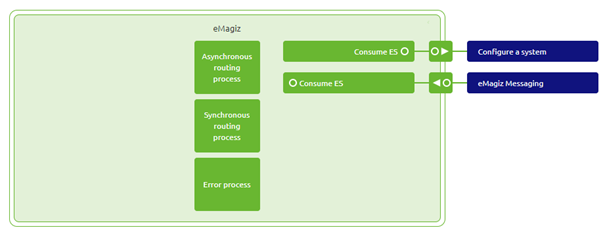

Now we need to ensure that we can consume data from the topic using the eMagiz components available in the eMagiz Flow Designer. In this case, we will need the Kafka message driven channel adapter.

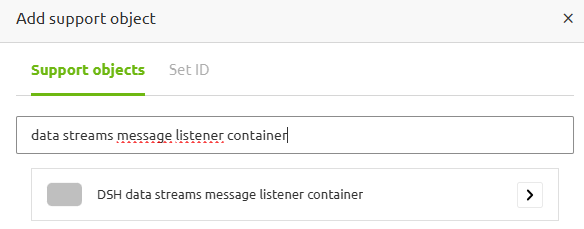

As the name suggests, this component waits for messages to arrive at the Kafka topic and consumes them when they do. In addition, we need a support object that establishes the connection between the eMagiz flow and the eMagiz Event Streaming cluster, which hosts the topic. This component is referred to as the DSH data streams message listener container.

3.3 Configure the support object

In this section, we will discuss all configuration options for the necessary support object.

3.3.1 Bootstrap server URL

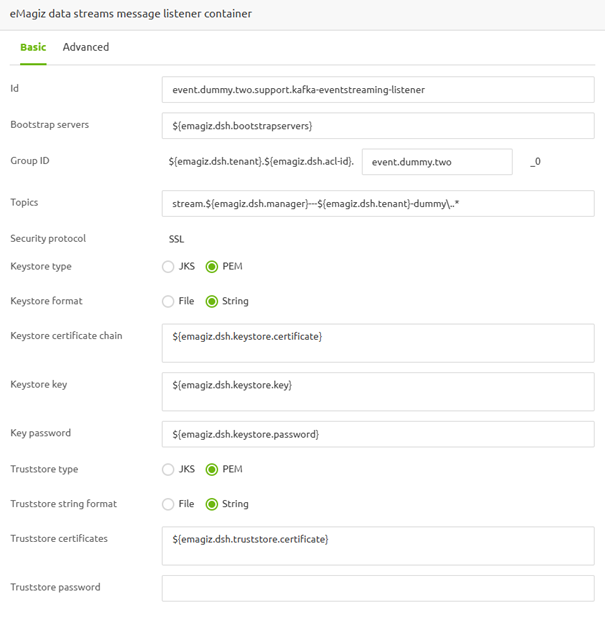

Within this component, you need to fill in several fields. The first is the bootstrap server URL, which is registered via a standard property: ${emagiz.dsh.bootstrapservers}.
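To illustrate what a property placeholder such as ${emagiz.dsh.bootstrapservers} stands for, the minimal sketch below resolves placeholders against a dictionary of property values. This is not how eMagiz resolves properties internally; the `resolve` helper and the broker addresses are hypothetical and serve only to show the idea.

```python
import re

# Hypothetical property values; the real value is generated by eMagiz.
properties = {
    "emagiz.dsh.bootstrapservers": (
        "broker-0.example.invalid:9091,broker-1.example.invalid:9091"
    ),
}

# Matches ${some.property.name} and captures the name between the braces.
PLACEHOLDER = re.compile(r"\$\{([^}]+)\}")


def resolve(value, props):
    """Replace every ${name} in value with props[name].

    Raises KeyError when a placeholder has no matching property, which is
    the kind of mistake a mistyped placeholder would cause.
    """
    def lookup(match):
        name = match.group(1)
        if name not in props:
            raise KeyError(f"Unresolved property placeholder: {name}")
        return props[name]

    return PLACEHOLDER.sub(lookup, value)


# A bootstrap server list is a comma-separated set of host:port pairs.
bootstrap_servers = resolve("${emagiz.dsh.bootstrapservers}", properties).split(",")
```

A mistyped placeholder name fails immediately in this sketch, which mirrors why the exact property name matters in the support object.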

3.3.2 Group ID

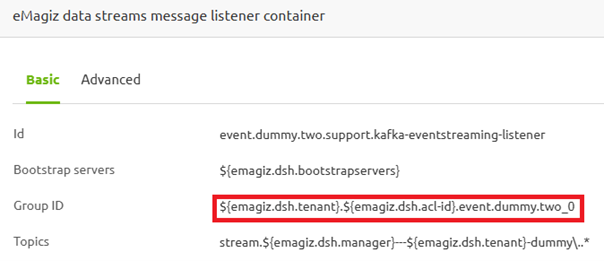

Alongside the bootstrap server URL, you need to specify the group ID. Note that only the free-form part of the group ID is editable. You can use any name you want here, but we advise a descriptive name that is easy to recognize later on. For our event processors, we use the following naming convention: event.{technical name input topic}.{technical name output topic}.
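The naming convention for event processors can be sketched as a small helper. The function name and the example topic names are hypothetical; only the pattern event.{technical name input topic}.{technical name output topic} comes from the text above, and the result is meant for the editable part of the group ID.

```python
def event_processor_group_id(input_topic, output_topic):
    """Build the editable part of a group ID for an event processor.

    Follows the pattern event.{input topic}.{output topic}. Empty names and
    names containing whitespace are rejected in this sketch, since they
    would make the group ID hard to recognize later on.
    """
    for name in (input_topic, output_topic):
        if not name or any(ch.isspace() for ch in name):
            raise ValueError(f"Invalid technical topic name: {name!r}")
    return f"event.{input_topic}.{output_topic}"


# Hypothetical technical topic names, for illustration only.
group_id = event_processor_group_id("orders-in", "orders-out")
```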

3.3.3 Topic Name

The topic name must match the actual topic exactly. As before, most of the value is auto-generated by eMagiz. The only part you should alter is the topic name itself, which is "dummy" in this example. Pay particular attention to the suffix, because a mismatch there leads to problems when consuming data.

3.3.4 SSL Configuration

Last but not least, on the Basic tab, you need to specify the SSL-related information that allows your eMagiz flow to authenticate itself with the DSH broker. These settings must be completed as illustrated in the picture below. Notice that all relevant information is stored in auto-generated properties for you. These properties are as follows.

- ${emagiz.dsh.keystore.certificate}

- ${emagiz.dsh.keystore.key}

- ${emagiz.dsh.keystore.password}

- ${emagiz.dsh.truststore.certificate}

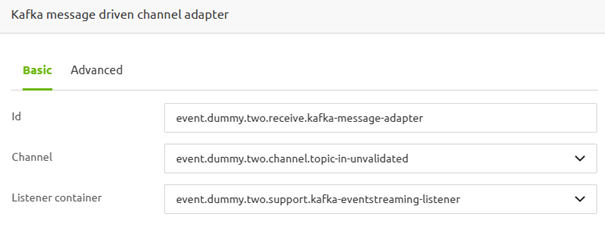
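Because a single mistyped placeholder is enough to break the connection, a quick check such as the one sketched below can help when reviewing a configuration. It only compares a list of configured values against the four placeholders listed above; the function name and the example input are hypothetical, and eMagiz itself does not expose such a helper.

```python
# The four auto-generated SSL property placeholders named in this section.
EXPECTED_SSL_PLACEHOLDERS = (
    "${emagiz.dsh.keystore.certificate}",
    "${emagiz.dsh.keystore.key}",
    "${emagiz.dsh.keystore.password}",
    "${emagiz.dsh.truststore.certificate}",
)


def missing_ssl_placeholders(configured_values):
    """Return the expected placeholders that are absent from the input.

    Surrounding whitespace is ignored, but the placeholder text itself
    must match exactly.
    """
    configured = {value.strip() for value in configured_values}
    return [p for p in EXPECTED_SSL_PLACEHOLDERS if p not in configured]


# Hypothetical configuration with one typo in the first value.
configured = [
    "${emagiz.dsh.keystore.cert}",
    "${emagiz.dsh.keystore.key}",
    "${emagiz.dsh.keystore.password}",
    "${emagiz.dsh.truststore.certificate}",
]
missing = missing_ssl_placeholders(configured)
```

An empty result means all four placeholders are present; any entry in the result points at a value that still needs to be corrected.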

3.4 Configure the inbound component

Now that we have the support object configured, we can fill in the details for our Kafka message-driven channel adapter. Here, you select the output channel and link the support object to this component. The result should be similar to this:

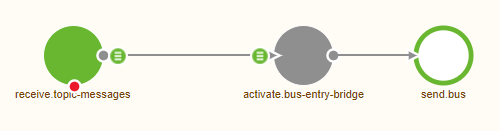

As a result, your flow should look similar to what is shown below:

3.5 Managing Consumer groups

In the Kafka Message Listener component, you will find a specific setting to identify the Group ID of this flow. A Group ID identifies a consumer group. A consumer group can contain more than one consumer, and all consumers in the group share the offset of the messages consumed from the topic. When a flow is duplicated for fail-over purposes, the shared Group ID ensures that each flow continues from the latest offset, so messages are not read twice. If consumers need to read every message for a different usage or business process, ensure that their Group IDs are distinct, because each Group ID has its own offset.
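The effect of sharing or separating Group IDs can be illustrated with a deliberately simplified in-memory model. This is not Kafka client code: it assumes a single partition, commits the offset on every read, and uses hypothetical class, group, and message names. It only shows that consumers with the same Group ID advance one shared offset, while a different Group ID starts from its own offset.

```python
class Topic:
    """Toy single-partition topic that tracks one offset per group ID."""

    def __init__(self, messages):
        self.messages = list(messages)
        self.group_offsets = {}  # group ID -> offset of the next message

    def poll(self, group_id):
        """Return the next message for this group, or None when caught up."""
        offset = self.group_offsets.get(group_id, 0)
        if offset >= len(self.messages):
            return None
        # Advance the shared offset so no consumer in this group
        # receives the same message again.
        self.group_offsets[group_id] = offset + 1
        return self.messages[offset]


topic = Topic(["order-1", "order-2", "order-3"])

# Two flows (primary and fail-over copy) sharing one hypothetical group ID.
first_read = topic.poll("event.orders.legacy")   # primary flow
second_read = topic.poll("event.orders.legacy")  # fail-over flow continues

# A flow with a distinct group ID reads the topic from its own offset.
other_read = topic.poll("event.orders.reporting")
```

In this model the fail-over flow picks up where the primary flow stopped, whereas the flow with the distinct Group ID receives the first message again for its own purpose.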

4. Key takeaways

- Utilizing eMagiz as a consumer is particularly useful in hybrid scenarios where both traditional messaging and event streaming are involved. In simpler cases, eMagiz automates much of the process for you.

- You can use the copy and paste functionality of eMagiz to give yourself a head start and reduce manual configuration.

- Many of the auto-generated eMagiz properties are necessary to set up the connection correctly, so ensure that you use the correct property placeholders.

- Ensure your eMagiz flow is correctly connected to the eMagiz Event Streaming cluster and configured with the necessary SSL settings to enable smooth data consumption.

- Properly manage consumer groups to ensure message offsets are handled correctly, avoiding duplicate reads and ensuring each consumer group gets the appropriate messages.

5. Suggested Additional Readings

If you are interested in this topic and want more information on it, please see the following links: