Using Mendix as a consumer

In this microlearning, we will focus on how you can use the eMagiz Connector, available via the portal, to consume data from topics managed within the eMagiz Kafka Cluster. By the end, you will understand how to set up a connection, configure a consumer, and use the eMagiz Connector to streamline data transport between systems.

Should you have any questions, please get in touch with us at academy@emagiz.com.

1. Prerequisites

- Basic knowledge of the eMagiz platform

- Basic knowledge of the Mendix platform

- A Mendix project in which you can test this functionality

- A Kafka cluster to which you can connect

- Access to the Deploy phase of your eMagiz project

- A connection between Mendix and eMagiz that is designed, created, and included in the active release

- The "eMagiz Connector" from the Mendix Marketplace, with:

  - The initial installation done

  - The initial configuration done

2. Key concepts

This microlearning focuses on using the eMagiz Connector in Mendix.

- In this context, "using" means being able to consume data from topics that are managed within the eMagiz Kafka Cluster.

- Knowing how to set up Mendix to consume data from topics makes it easier to transport large volumes of data between several systems (e.g., two Mendix applications).

Some key concepts before you dive further into this microlearning:

- Consuming data from a topic means that the external system (in this case, Mendix) reads data from a predefined topic on which the data is stored temporarily.
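To make "stored temporarily" concrete, here is a minimal Java sketch of a topic as an append-only log. It is a hypothetical in-memory model, not the eMagiz Connector or the Kafka client: the class names are invented for illustration, and retention is simplified to "keep the last N messages" (real Kafka retention is usually based on time or size). It shows that reading does not remove messages; only retention does.

```java
import java.util.ArrayList;
import java.util.List;

class TopicSketch {
    // One message on the topic: its position (offset), key, and payload.
    record Message(long offset, String key, String payload) {}

    // Hypothetical in-memory stand-in for a single Kafka topic partition.
    static final class Topic {
        private final List<Message> log = new ArrayList<>();
        private final int retainedMessages; // simplified stand-in for a retention policy
        private long nextOffset = 0;

        Topic(int retainedMessages) {
            this.retainedMessages = retainedMessages;
        }

        void produce(String key, String payload) {
            log.add(new Message(nextOffset++, key, payload));
            // Retention drops the oldest messages, regardless of whether anyone read them.
            while (log.size() > retainedMessages) {
                log.remove(0);
            }
        }

        // Reading removes nothing; each consumer only keeps track of its own offset.
        List<Message> readFrom(long offset) {
            List<Message> result = new ArrayList<>();
            for (Message m : log) {
                if (m.offset() >= offset) {
                    result.add(m);
                }
            }
            return result;
        }
    }

    public static void main(String[] args) {
        Topic topic = new Topic(3);
        for (int i = 1; i <= 4; i++) {
            topic.produce("order-" + i, "{\"id\":" + i + "}");
        }
        // Offset 0 has expired (only 3 messages are retained), so this yields offsets 1..3.
        System.out.println(topic.readFrom(0).size()); // 3
    }
}
```

Because a consumer only tracks a position (offset), two Mendix applications in different consumer groups can read the same messages independently.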

3. Using the eMagiz Connector Module

With the help of the Mendix module (called eMagiz Connector) created by the eMagiz team, you can easily connect Mendix and eMagiz for data integration. This microlearning focuses on consuming data via a connection to the eMagiz Kafka Cluster. The previous microlearning focused on producing data.

To use the eMagiz Connector in Mendix, you need to be able to do at least the following:

- Set up a connection to the external Kafka Cluster (i.e., the eMagiz Kafka Cluster) from Mendix

- Configure a consumer that can read (listen to) data from a topic
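The eMagiz Connector sets up the connection and the consumer for you, so you do not write this code yourself. As a rough illustration of what those two steps involve, the sketch below collects standard Apache Kafka consumer property names into a `java.util.Properties` object. The broker address and group id are hypothetical placeholders, the `SSL` security protocol is an assumption, and certificate settings are left out.

```java
import java.util.Locale;
import java.util.Properties;
import java.util.Set;

class ConsumerConfigSketch {
    // Values Kafka defines for the auto.offset.reset consumer setting.
    private static final Set<String> OFFSET_RESET_VALUES = Set.of("latest", "earliest", "none");

    static Properties build(String bootstrapServers, String groupId, String autoOffsetReset) {
        // Normalize "Latest"/"LATEST" to the lowercase form used in Kafka configuration.
        String reset = autoOffsetReset.toLowerCase(Locale.ROOT);
        if (!OFFSET_RESET_VALUES.contains(reset)) {
            throw new IllegalArgumentException("Unsupported auto.offset.reset: " + autoOffsetReset);
        }
        Properties props = new Properties();
        // Step 1: the connection to the cluster (here, the eMagiz Kafka Cluster).
        props.setProperty("bootstrap.servers", bootstrapServers);
        // Assumption: the cluster requires TLS; keystore/truststore settings are omitted.
        props.setProperty("security.protocol", "SSL");
        // Step 2: the consumer; the group id identifies whose offsets are tracked.
        props.setProperty("group.id", groupId);
        props.setProperty("auto.offset.reset", reset);
        props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        return props;
    }

    public static void main(String[] args) {
        // Hypothetical broker address and consumer group id.
        Properties props = build("broker.example.com:9093", "my-mendix-app", "Latest");
        props.forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```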

Once you have completed these steps, consider how you want to transfer data from and to your data model. That part is out of scope for this microlearning, as it concerns building microflows in Mendix.

In the remainder of this microlearning, we will discuss the options for receiving messages via the eMagiz Kafka cluster.

3.1 Consuming Messages - "String Variant"

To receive data in Mendix, you must define several configuration options in the Mendix project. In this example, we examine a simple configuration that uses a string variable to represent the payload. Later, we examine the variant that uses a Mendix object as the starting point for receiving data.

In eMagiz, you will see an autogenerated flow in Create that you cannot edit, because the functionality eMagiz generates is all that is needed. Transfer this flow to Deploy and add it to a release so that it is ready to be used by a Mendix application.

In parallel, develop the Mendix application so that it can consume and process messages from the eMagiz Kafka cluster. This work can be done either before or after the eMagiz side is finished.

3.1.1 Startup action

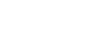

In Mendix, add the "StartStringConsumer" Java action to the after-startup microflow. The most important configuration items are:

- The destination (i.e., the topic from which you want to consume the messages). The eMagiz developer can supply it, or, if you have access to the eMagiz model in question, you can find it under Deploy -> Mendix Connectors.

- Validate message. This determines whether an incoming message is validated before it is passed to the microflow that handles it.

- The microflow that handles the incoming message.

- Auto offset reset option. This defaults to the advised setting (i.e., Latest) and deserves careful consideration. It determines where the consumer group starts reading when it connects to the broker for the first time, that is, before it has committed any offset. With Latest, only messages produced after that moment are consumed.
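The effect of the auto offset reset option can be modeled in a few lines. This is a hypothetical sketch of Kafka's documented behavior, not code from the eMagiz Connector, and the class and method names are invented: if the consumer group already has a committed offset, it resumes there; otherwise Latest starts at the end of the topic (only new messages) and Earliest starts at the oldest message still retained.

```java
import java.util.HashMap;
import java.util.Map;

class OffsetResetSketch {
    enum AutoOffsetReset { LATEST, EARLIEST }

    // Hypothetical: committed offsets per consumer group (Kafka keeps these on the broker).
    private final Map<String, Long> committedOffsets = new HashMap<>();

    /**
     * Decides where a consumer group starts reading.
     * logStartOffset: oldest offset still retained; logEndOffset: offset the next message will get.
     * Simplification: Kafka also applies the reset option when a committed offset is no longer
     * on the topic; that case is left out here.
     */
    long startingOffset(String groupId, AutoOffsetReset reset, long logStartOffset, long logEndOffset) {
        Long committed = committedOffsets.get(groupId);
        if (committed != null) {
            // Once the group has a committed position, the reset option no longer matters.
            return committed;
        }
        return switch (reset) {
            case LATEST -> logEndOffset;     // only messages produced from now on
            case EARLIEST -> logStartOffset; // everything still retained on the topic
        };
    }

    void commit(String groupId, long nextOffsetToRead) {
        committedOffsets.put(groupId, nextOffsetToRead);
    }

    public static void main(String[] args) {
        OffsetResetSketch broker = new OffsetResetSketch();
        // The topic retains offsets 0..9, so the next message will get offset 10.
        System.out.println(broker.startingOffset("app-a", AutoOffsetReset.LATEST, 0, 10));   // 10
        System.out.println(broker.startingOffset("app-b", AutoOffsetReset.EARLIEST, 0, 10)); // 0
        broker.commit("app-a", 12);
        System.out.println(broker.startingOffset("app-a", AutoOffsetReset.EARLIEST, 0, 15)); // 12
    }
}
```

One reason to prefer Latest for a new application is that it does not start by processing a backlog of older messages; Earliest fits when the application must also handle messages that were already on the topic when it first connected.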

3.2 Consuming Messages - "Object Variant"

To receive data in Mendix, you must define several configuration options in the Mendix project. In this example, we examine a more complex configuration that uses a Mendix object in conjunction with an import mapping that represents the payload.

As with the string variant, eMagiz autogenerates a flow in Create that you cannot edit. Transfer it to Deploy and add it to a release so that it is ready to be used by a Mendix application.

In parallel, develop the Mendix application so that it can consume and process messages from the eMagiz Kafka cluster, either before or after the eMagiz side is finished.

3.2.1 Startup action

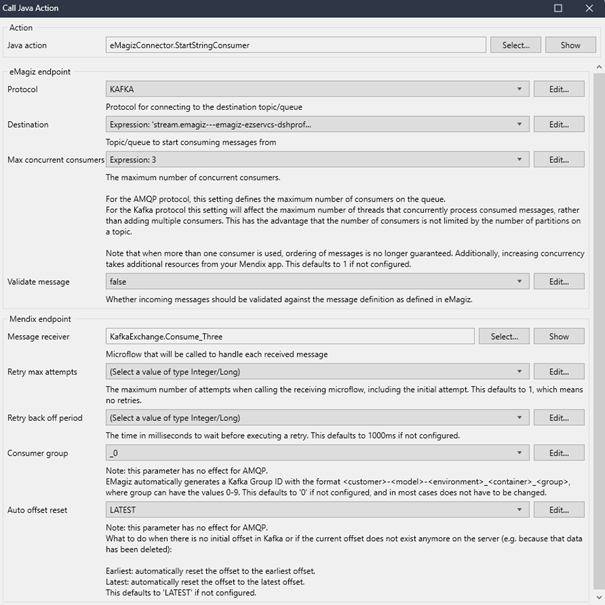

In Mendix, add the "StartMessageConsumer" Java action to the after-startup microflow. The most important configuration items are:

- The destination (i.e., the topic from which you want to consume the messages). The eMagiz developer can supply it; alternatively, you can find it under Deploy -> Mendix Connectors (if you have access to the eMagiz model in question) or in the eMagiz Catalog.

- The import mapping (the mapping of the incoming JSON or XML payload to your data entities). The definition of the JSON/XML can be determined by the eMagiz developer (and then shared with the Mendix developer) or determined by the Mendix developer (and then shared with the eMagiz developer).

- Validate message. This determines whether an incoming message is validated before it is passed to the microflow that handles it.

- The microflow that handles the incoming message.

- Auto offset reset option. This defaults to the advised setting (i.e., Latest) and deserves careful consideration. It determines where the consumer group starts reading when it connects to the broker for the first time, that is, before it has committed any offset. With Latest, only messages produced after that moment are consumed.
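In Mendix, an import mapping is configured in Studio Pro rather than written in code. To illustrate what it does for the object variant, the hypothetical Java sketch below maps an XML payload onto an `Order` object using the JDK's built-in DOM parser, and approximates "Validate message" as a check that required elements are present. The `Order` entity, its element names, and that interpretation of validation are assumptions made for the example.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

class ImportMappingSketch {
    // Hypothetical entity filled by the mapping (in Mendix: an entity in the domain model).
    record Order(String orderId, String customer) {}

    static Order map(String xmlPayload, boolean validate) throws Exception {
        DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
        // Reject DOCTYPE declarations to avoid XML external entity (XXE) issues.
        factory.setFeature("http://apache.org/xml/features/disallow-doctype-decl", true);
        DocumentBuilder builder = factory.newDocumentBuilder();
        Document doc = builder.parse(new InputSource(new StringReader(xmlPayload)));

        String orderId = text(doc, "OrderId");
        String customer = text(doc, "Customer");
        // Assumption: "validate message" approximated as "all required elements are present".
        if (validate && (orderId == null || customer == null)) {
            throw new IllegalArgumentException("Message rejected: missing required element");
        }
        return new Order(orderId, customer);
    }

    // Returns the text of the first element with this name, or null if it is absent.
    private static String text(Document doc, String element) {
        NodeList nodes = doc.getElementsByTagName(element);
        return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent();
    }

    public static void main(String[] args) throws Exception {
        Order order = map("<Order><OrderId>42</OrderId><Customer>ACME</Customer></Order>", true);
        System.out.println(order); // Order[orderId=42, customer=ACME]
    }
}
```

Whichever side defines the JSON/XML structure, both developers need to agree on element names such as these, because the mapping only fills the attributes it can match.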

3.3 Execute microflow

When processing messages, you have access to the message key. To use it, add an input parameter named "Key" of type "String" to the microflow that handles the message. You can then define a second input parameter named "Payload" that contains the payload. This is either a "String" (string variant) or an object of the entity used in the import mapping (object variant).
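As an analogy only (Mendix microflows are modeled, not coded), the handling microflow behaves like a callback with two parameters: `Key` as a `String` and `Payload` as either a `String` or the mapped object. The sketch below expresses that contract with `java.util.function.BiConsumer`; the `deliver` method and the `Order` type are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BiConsumer;

class MicroflowParametersSketch {
    // Hypothetical stand-in for the entity used in an import mapping.
    record Order(String orderId, String customer) {}

    // Analogy: the connector calls the handling microflow with "Key" and "Payload".
    static <T> void deliver(String key, T payload, BiConsumer<String, T> microflow) {
        microflow.accept(key, payload);
    }

    public static void main(String[] args) {
        List<String> processed = new ArrayList<>();

        // String variant: Payload is the raw message text.
        BiConsumer<String, String> onString = (key, payload) -> processed.add(key + " -> " + payload);
        // Object variant: Payload is the object produced by the import mapping.
        BiConsumer<String, Order> onOrder = (key, order) -> processed.add(key + " -> order " + order.orderId());

        deliver("order-42", "{\"orderId\":\"42\"}", onString);
        deliver("order-42", new Order("42", "ACME"), onOrder);
        processed.forEach(System.out::println);
    }
}
```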

4. Key takeaways

To listen to a topic in Mendix, you need the following:

- The destination (i.e., the topic from which you want to retrieve the information)

- Execute microflow (the microflow that will process the data that is received in Mendix)

- Optionally, an import mapping (object variant), plus settings such as message validation and the auto offset reset option.

5. Suggested Additional Readings

If you are interested in this topic and want more information on it, please see the following links: